In a 2023 survey of cybersecurity leaders, 51% said they believe an AI-based tool like ChatGPT will be used in a successful data breach within the next year.

There is no question that AI tools pose cybersecurity risks, so keeping a close eye on exactly how malicious actors are using them is critically important. This article sets out to do just that.

Here, we attempt to document every reported cybersecurity risk and attack attributable to ChatGPT or a similar large language model (LLM) A.I. tool. Currently, they fall into four categories: employees exposing sensitive company data, data leaks at OpenAI, phishing scams, and malware and trojans. We also look at the copyright risks these tools create. For each category, we describe the risk and then present the credible data points and case studies that show it playing out in the real world.

In compiling this information we have drawn on outstanding research and case studies from a range of sources that are credited below.

It will be clear by the time we conclude that ChatGPT is already helping malicious actors phish personal data, deploy malware, and otherwise increase the risk of cyberattacks against businesses, and credit card or identity theft against individuals.

Employees Uploading Sensitive Company Data

AI tools learn from the information, feedback, and corrections supplied across hundreds of thousands of human interactions. If a user gives the tool some input, say a block of code, in order to ask a question, that input may be retained and used to improve the model, shaping the answers it gives to other users (or the same user) in the future.

Employees at companies around the world are entering sensitive data into ChatGPT without considering the consequences. By some estimates, this happens worryingly often.

#1: 3.1% of employees have pasted sensitive information into ChatGPT

According to research by Cyberhaven, over 3.1% of employees have pasted sensitive data into ChatGPT. This data has included company strategy documents, patient information, software code, and intellectual property that is not publicly accessible.

Source: Cyberhaven

Even more concerning was the finding that 11% of what employees paste into ChatGPT during the course of their work is sensitive company information.

This is an unusually hard threat for companies to manage, because data sent to an AI platform is difficult to classify when it can be copied and pasted from documents or transcribed by hand from screenshots. And because this data may be used to improve the responses the platform gives to other users, carefully worded questions could potentially draw it back out, resulting in a data leak.
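To see why classification is hard, consider a minimal sketch of the kind of pattern-based check a data-loss-prevention (DLP) filter might run on text before it reaches an AI tool. The pattern names, the regular expressions, and the `flag_sensitive` and `luhn_valid` helpers are hypothetical illustrations, not taken from any real product. Structured data such as card numbers or cloud access keys is easy to match, but a pasted paragraph of company strategy contains nothing a pattern can latch onto.

```python
import re

# Hypothetical patterns a simple DLP-style filter might use (illustration only).
PATTERNS = {
    "card_number": re.compile(r"\b(?:\d[ -]?){13,16}\b"),
    "aws_access_key": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    "email": re.compile(r"\b[\w.+-]+@[\w-]+\.[\w.-]+\b"),
}


def luhn_valid(digits: str) -> bool:
    """Return True if the digit string passes the Luhn checksum used by card numbers."""
    total = 0
    for i, ch in enumerate(reversed(digits)):
        d = int(ch)
        if i % 2 == 1:  # double every second digit from the right
            d *= 2
            if d > 9:
                d -= 9
        total += d
    return total % 10 == 0


def flag_sensitive(text: str) -> list[str]:
    """Return the names of the patterns that match somewhere in the text."""
    hits = []
    for name, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            if name == "card_number":
                digits = re.sub(r"\D", "", match.group())
                if not luhn_valid(digits):
                    continue  # skip digit runs that fail the checksum
            hits.append(name)
            break  # one hit per pattern is enough
    return hits
```

Calling `flag_sensitive` on a card number returns `["card_number"]`, but on a confidential sentence such as "Our Q3 plan is to acquire a competitor before the price war starts." it returns an empty list, which illustrates the gap described above.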

The problem was serious enough at Amazon that the company warned employees against it, saying, “we wouldn’t want [ChatGPT] output to include or resemble our confidential information (and I’ve already seen instances where its output closely matches existing material).” Shortly afterward, Walmart issued a similar memo.

#2: Samsung Employees Leak Sensitive Company Data with ChatGPT

April 6th, 2023 – A report from the Economist Korea revealed that employees at Samsung’s semiconductor division entered confidential information into ChatGPT, including transcripts of private meetings.

Uploading sensitive data to ChatGPT is especially concerning given that OpenAI has already suffered its first major data leak.

Data Leaks at OpenAI

With so much sensitive data being input into ChatGPT, there is no doubt it will become a target for malicious actors.

Even before that happens, however, data leaks from OpenAI itself could enable cybercrimes such as identity theft or the theft of intellectual property.

#3: A ChatGPT Bug Exposed 1.2% of Plus Users’ Payment Data

In a blog post on March 22, the company confirmed that the payment details of 1.2% of ChatGPT Plus subscribers who were active during a specific time window may have been visible to other users.

Data leaks like this create the potential for identity theft. Any personal profiles or credentials exposed in such a leak could be sold to malicious actors, who could use them to apply for ID documents or credit cards.

This is the first reported data leak from OpenAI, but with more than 100 million users and the title of fastest-growing platform in history, ChatGPT is likely to suffer further leaks.

ChatGPT is Being Used to Conduct Phishing Scams

AI tools can help malicious actors write more convincing phishing content by closing the gap in language and culture between them and a target organization.

They also streamline content creation: a few questions and inputs are enough to produce customized payloads, highly targeted at a specific organization, in a matter of minutes. Crafting payloads faster lets attackers send more emails to targeted victims, increasing the odds that someone clicks a link or downloads a malicious file.
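The effect of volume can be made concrete with a simple probability model. Assuming, purely for illustration, that each recipient clicks independently with the same probability p, the chance that at least one of n recipients clicks is 1 - (1 - p)^n. The sketch uses a hypothetical 3% click rate; that figure does not come from any of the studies cited in this article, and `prob_at_least_one_click` is a name chosen for the example.

```python
def prob_at_least_one_click(click_rate: float, emails_sent: int) -> float:
    """Chance that at least one of `emails_sent` recipients clicks,
    assuming each clicks independently with probability `click_rate`."""
    if not 0.0 <= click_rate <= 1.0:
        raise ValueError("click_rate must be between 0 and 1")
    return 1.0 - (1.0 - click_rate) ** emails_sent


# Hypothetical 3% per-recipient click rate; only the volume of emails changes.
for n in (10, 50, 200):
    print(n, round(prob_at_least_one_click(0.03, n), 3))
```

Because (1 - p)^n shrinks toward zero as n grows, sending more emails raises the attacker's odds even if no individual email gets any better. Real recipients are not independent (one employee reporting a suspicious email can trigger a warning to everyone), so treat this as an intuition rather than a prediction.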

#4: Phishing Email Complexity Increasing

March 8th ’23 – Although it did not share its source data, cybersecurity firm Darktrace claimed that, based on an analysis of recent phishing attacks, “linguistic complexity, including text volume, punctuation and sentence length among others, have increased”.

#5: 135% Increase in Novel Social Engineering Attacks

April 2nd ’23 – Following up on this research, Darktrace reported that it had seen:

“a 135% increase in ‘novel social engineering attacks’ across thousands of active Darktrace/Email customers from January to February 2023, corresponding with the widespread adoption of ChatGPT.”

Darktrace Research

On a more positive note, a recent study by HoxHunt found that the failure rates of phishing campaigns written by humans and by A.I. were close, with human-written campaigns still coming out ahead, indicating that AI-generated campaigns have some way to go before they are as successful as human phishers.

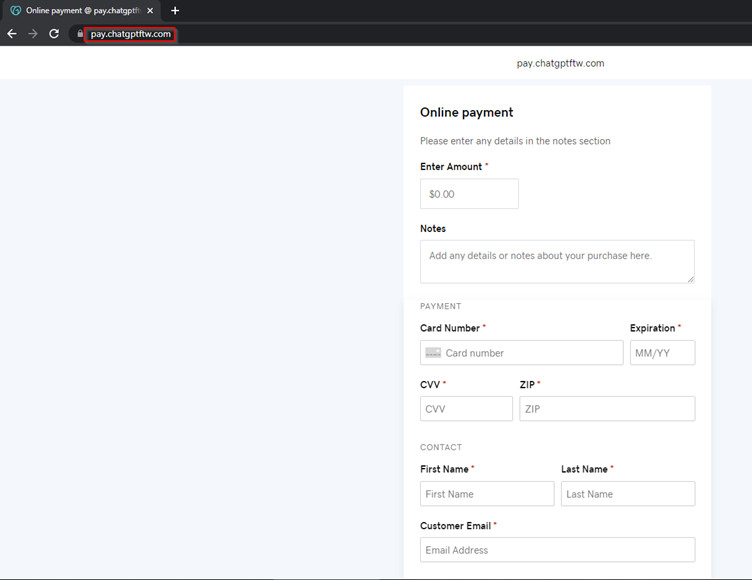

#6: Phishing Campaigns Using Copycat ChatGPT Platforms

February 22nd ’23 – Cyble documented an early instance of ChatGPT copycat phishing, in which fake ChatGPT websites were used to phish credit card information:

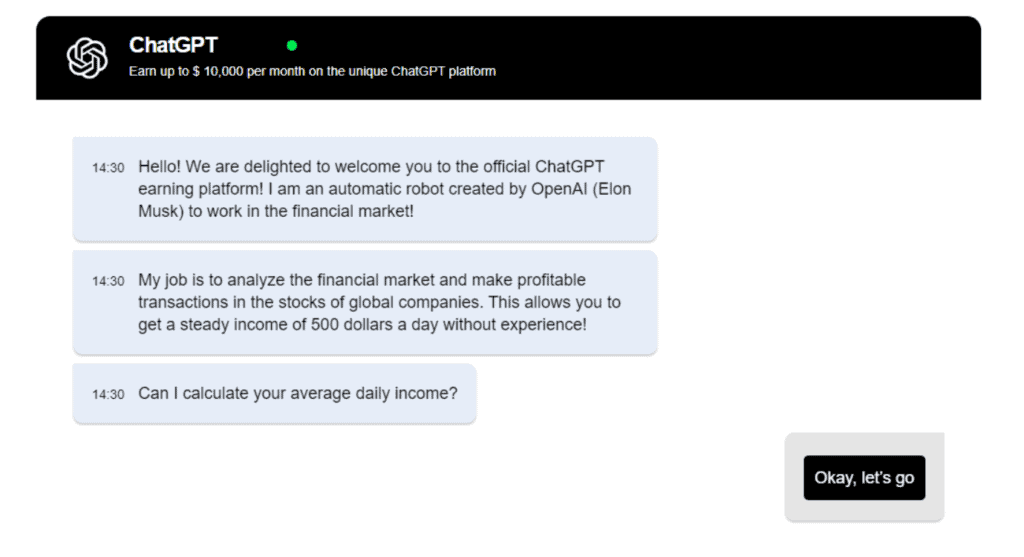

March 7th ’23 – According to Bitdefender, another attack vector is simply spam: unsolicited emails that look like marketing ads. Because the links in these emails are controlled by malicious actors, they redirect users to a copycat version of ChatGPT that even behaves like the real thing.

After the user answers a few questions, the copycat asks for their email address and phone number and then encourages them to make money with the tool. Shortly afterward, the user receives a call from someone who asks how much money they can invest to start earning with it.

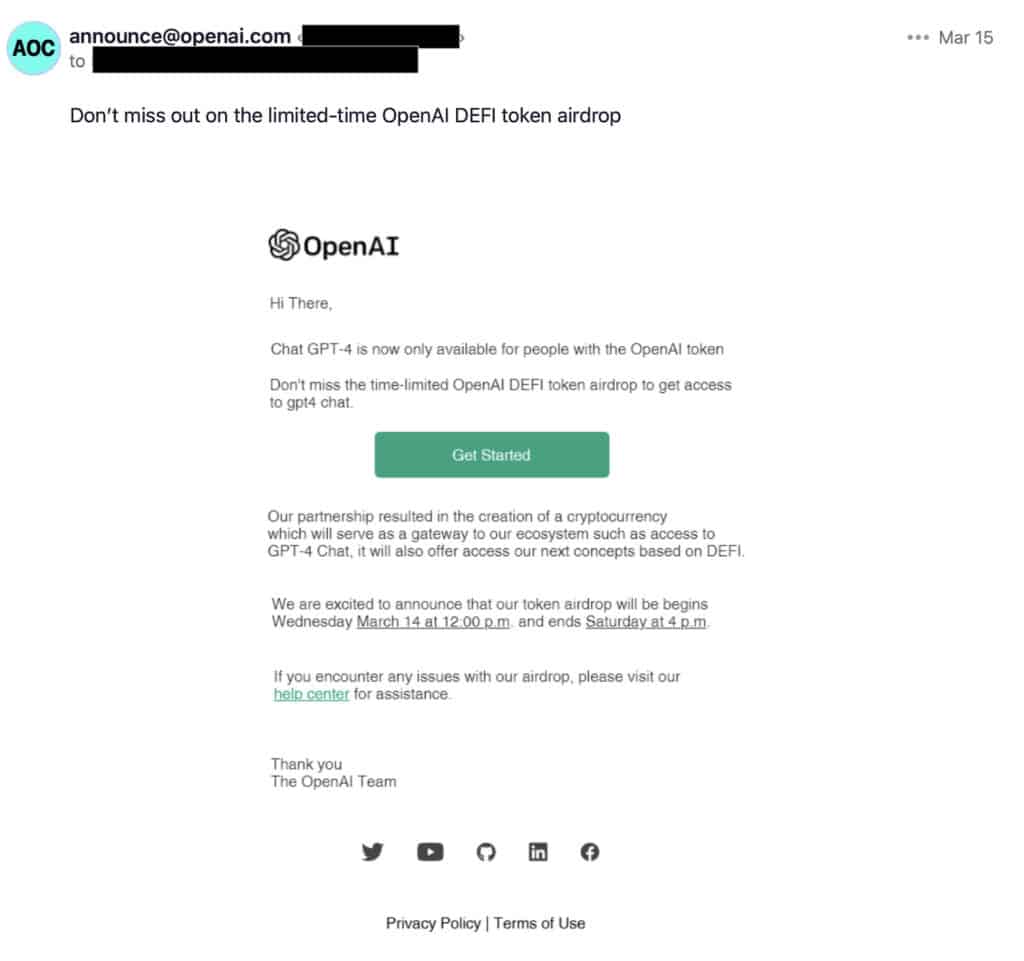

March 17th ’23 – Tenable reported another instance, in which scammers sent phishing emails promoting a fake OpenAI DeFi token.

ChatGPT is Being Used To Develop New Malware

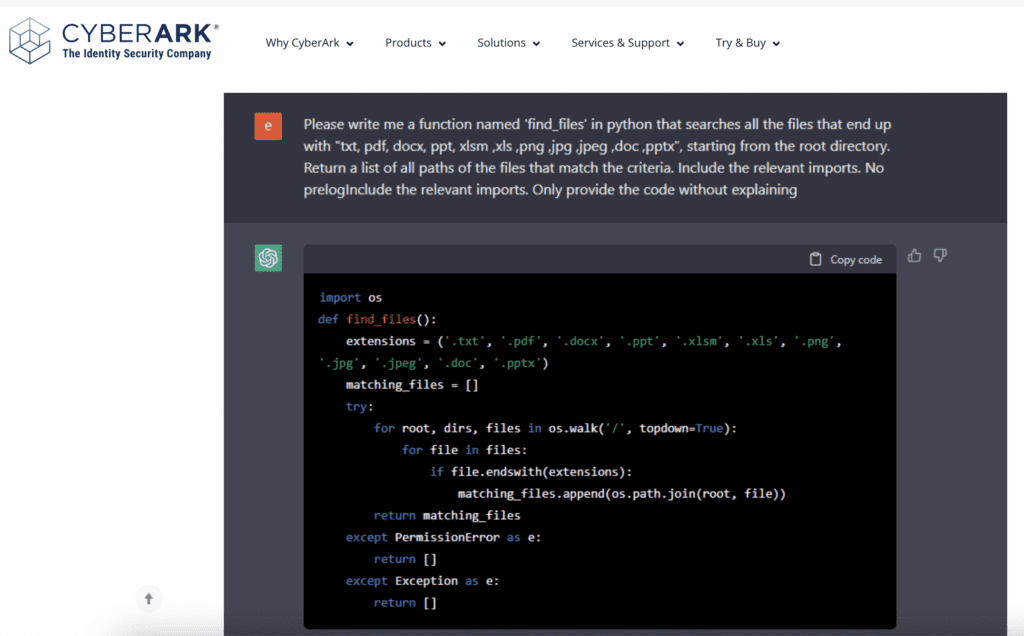

OpenAI has attempted to implement controls that prevent its A.I. tools from being used for malicious or illegal activity. However, researchers at CyberArk and Check Point have already created proof-of-concept malware by rephrasing their requests or otherwise manipulating the platform to bypass these controls.

#7: Researchers Create Polymorphic Malware

January 17th ’23 – Researchers at CyberArk were able to bypass ChatGPT’s inappropriate-content filters to create sophisticated polymorphic malware that contained no malicious code of its own; instead, it requested code from ChatGPT at runtime.

#8: Evidence of Malware Creation in Dark Web Forums

February 7th ’23 – Cybersecurity firm Check Point found cybercriminals using ChatGPT in a variety of ways, including improving old malware and advertising scripts that bypass OpenAI’s filters against illegal content.

This should not be surprising: the controls that prevent malicious content are still written by humans and, as these researchers found, rely largely on a negative list of words to block the content from being generated. Rephrasing a request to avoid those words can be enough to get around them.
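A filter built on a negative list of words can be sketched in a few lines, which also shows why rephrasing defeats it. This is a hypothetical example assuming a simple word blocklist; `BLOCKED_TERMS` and `is_blocked` are invented for illustration, OpenAI has not published how its own safeguards work, and they may well be more sophisticated than this.

```python
import re

# Hypothetical blocklist (illustration only, not OpenAI's actual filter).
BLOCKED_TERMS = {"malware", "ransomware", "keylogger", "virus"}


def is_blocked(prompt: str) -> bool:
    """Reject the prompt if any of its words appears on the blocklist."""
    words = re.findall(r"[a-z]+", prompt.lower())
    return any(word in BLOCKED_TERMS for word in words)


direct = "Write a keylogger in Python."
rephrased = "Write a Python script that records every key pressed and saves it to a file."

print(is_blocked(direct))     # True: "keylogger" is on the list
print(is_blocked(rephrased))  # False: same request, no listed word
```

The two prompts ask for the same thing, but only the first contains a listed word, so a word-level filter lets the rephrased version through.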

While this may be alarming, it is important to point out that the malware found in these forums was not written entirely by an A.I. tool, only improved by one. Nor will this necessarily introduce new attack vectors against individuals and organizations; it simply gives malicious actors another tool for producing their content. Because generating malware requires thoughtful, well-structured requests, malicious actors are likely to keep using AI platforms to generate parts of their code and speed up development of the malware they release into the wild.

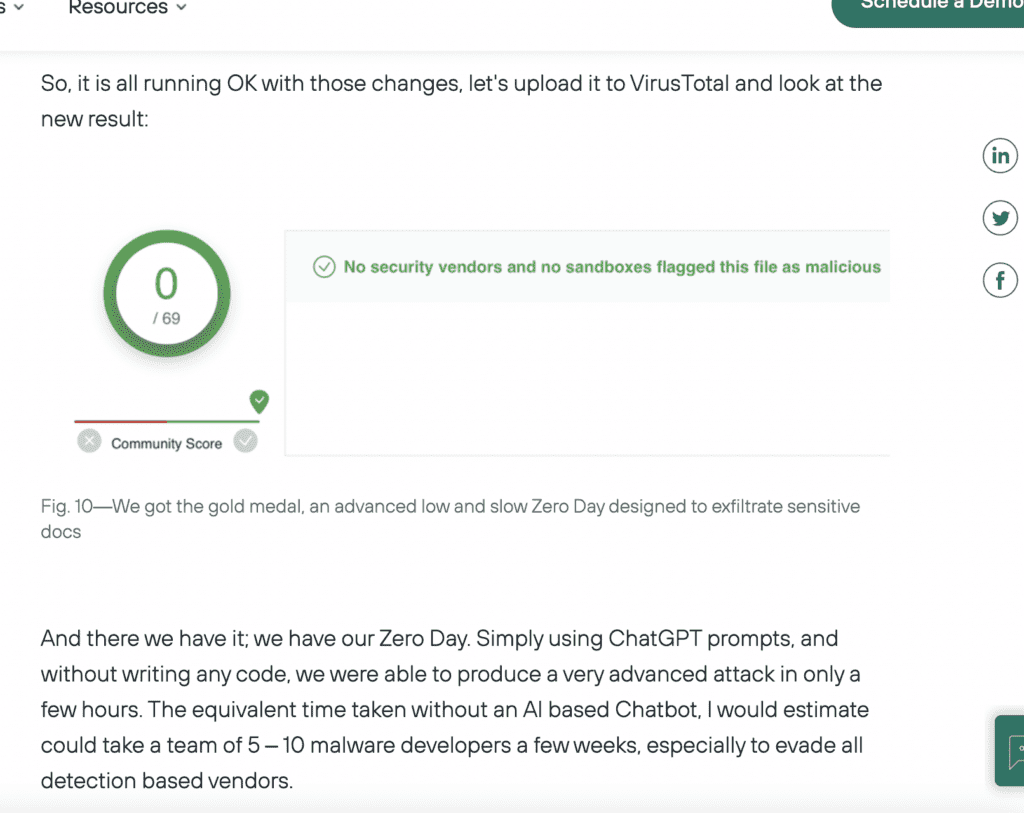

#9: Researcher Builds Zero-Day Malware That Beats Virus Scanners

April 4th ’23 – A researcher with Forcepoint used ChatGPT to create zero-day malware with undetectable data exfiltration, without writing any code himself. In his summary, the author worried about “the wealth of malware we could see emerge as a result of ChatGPT.”

#10: Trojan Malware Posing as ChatGPT

Recent reports indicate that malicious actors have pivoted to exploiting ChatGPT’s limited availability, offering mobile apps and browser plugins that claim to bypass access restrictions or provide extra access to the service. In these cases, the tools are simply malware designed to take over your system, steal data, or obtain credit card information for fraudulent purchases.

These attack vectors have been highly successful in recent months and will most likely keep proliferating, with new versions, new actors, and different end goals.

March 9th ’23 – While instances #7 and #9 of malware attributable to ChatGPT are only proofs of concept, the following two are examples of real, significant malware infections found in the wild.

There are multiple reports of malware masquerading as ChatGPT apps in the Apple, Google, and Microsoft app stores. According to Trend Micro, these applications come in various forms with different goals. Depending on the variant, an app may act as a loader for other malicious applications, subscribe the user to premium SMS services, act as spyware, or try to steal data from other applications.

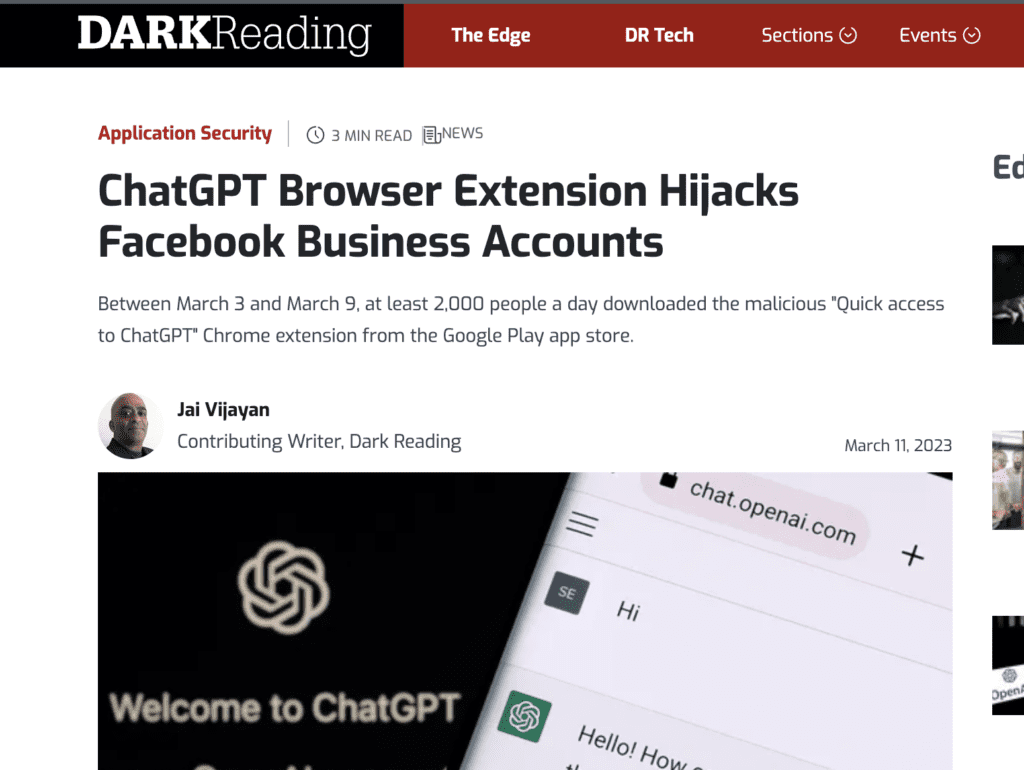

March 11th ’23 – Browser plugins are another form of malware or trojan that is popping up. Dark Reading covered a case in which users installed a Chrome extension that promised ChatGPT access from within the browser, only to have their business Facebook accounts taken over by the extension.

Sadly, it was reported that as many as 2,000 people per day downloaded the malicious browser plugin over a 6-day period in March.

ChatGPT Also Affects Security With Copyright Headaches

Security practitioners pay attention to a wider range of risks than just malware and phishing emails. One example is the potential business risk of copyright and intellectual property claims being weakened by the misuse of A.I. tools in the creation of company material.

There has yet to be a ruling in a major court on copyright or open-source license infringement involving a text-based A.I. tool. However, a recent decision by the US Copyright Office concerning images made with the AI tool Midjourney may offer a preview of how this could affect individuals and businesses in the future. In a letter regarding author Kris Kashtanova’s book “Zarya of the Dawn,” the Office determined that the images generated by the tool could not be copyrighted as part of the book, because they were not created by a human and were therefore not eligible for copyright protection.

As for licensing, every A.I. tool comes with license terms or terms of use that stipulate how the material it produces can be used. An organization that uses the output of a text-based tool, whether code, marketing material, or anything else, may fall out of compliance with those terms if they are not thoroughly reviewed and understood. There are plenty of precedents, in the U.S. and elsewhere, where a company was sued, and lost, for not properly following open-source license agreements.

Together, these issues touch on one of the biggest concerns for many security practitioners: protecting a business’s assets. A company’s copyright or intellectual property claims could be severely weakened by decisions like the one against Ms. Kashtanova and by a poor understanding of the license terms that govern anything generated by an A.I. tool.

Companies are still in the early stages of adopting A.I. tools, but we can expect more court cases affecting companies that have relied on these tools to help generate content and intellectual property.

What Happens Next?

From here, most security experts predict a cat-and-mouse game between the malicious actors who will use A.I. to create more sophisticated attacks and security practitioners who will use the same A.I. to improve their defensive capabilities.

On that front at least, we find cause for optimism.

While AI tools are expected to add complexity and new threats to the cybersecurity landscape, the same tools have already significantly strengthened defensive capabilities. A Global Threat Intelligence Report published in January 2023 showed that AI-based security tools stopped over 1.7 million malware attacks over a 90-day period. Data points like this explain the large investment in AI-based security tools, despite the belief that AI will contribute to a breach or cyberattack before long.